AI Witnesses

AI as Independent Observers in Courts and Disputes

Thank you to our Sponsor: Higgsfield AI

Courts and arbitration panels rely on witnesses because human stories help explain what happened. Yet human memory fades, perception varies, and incentives distort. Modern life also leaves a dense trail of machine records. Cars record speed, braking, steering angle, and driver assistance alerts. Phones record location and motion. Buildings record door access logs and camera feeds. Online services record clicks, messages, and edits. The next step goes beyond collecting records: using AI to organize and interpret them in ways that resemble a witness statement. An AI witness is a system that produces a structured account of events from logs, sensor data, and reconstructions, then presents that account as quasi-testimony.

Once a tribunal treats AI outputs as independent observations, dispute resolution begins to change. A traffic crash may come with a machine-generated timeline claiming Driver A accelerated seconds before impact while Driver B crossed a lane line. A workplace dispute may include a reconstruction of database access matched against badge swipes and video. An international arbitration may include a model-based account of cargo temperature excursions matched against vessel telemetry and port dwell time. The promise is clarity and speed. The danger is false certainty wrapped in technical polish.

How AI witnesses could appear in everyday cases

Traffic and injury disputes provide a clear entry point because data sources already exist and liability often turns on narrow factual questions. Many newer vehicles store event data around crashes. Many insurers use telematics. Street cameras and storefront cameras capture partial views. A reconstruction system can fuse these sources into a single timeline, infer trajectories, and estimate impact speeds.

A typical AI witness package in a crash dispute might include a synchronized timeline, a physics-based reconstruction, and a confidence report separating direct readings from inferred estimates. A serious version would also provide alternative scenarios that fit available data almost as well, showing uncertainty instead of a single story.

Civil court dynamics change even before trial. Settlement discussions can become anchored to the reconstruction narrative. Criminal court dynamics can shift even earlier. Charging decisions, bail decisions, and plea negotiations can all be influenced by a data-driven account that appears precise.

• Vehicle event records can describe braking, speed, and steering changes before impact

• Phone motion sensors and location traces can add context for driver attention and movement

• Video frames can support lane position estimates when timestamps align reliably

• Confidence ranges matter because a reconstruction always includes inference steps

• Competing reconstructions may differ mainly due to assumptions, not due to facts
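The synchronized timeline described above can be sketched in a few lines. This is only an illustration under stated assumptions: the `Event` fields, the source names, and the sample readings are hypothetical, and all timestamps are assumed to sit on one shared clock already. The point is that each entry carries a flag separating direct readings from inferred estimates.

```python
from dataclasses import dataclass
import heapq

@dataclass(frozen=True)
class Event:
    t: float          # seconds on a shared clock (assumed already synchronized)
    source: str       # hypothetical source label
    description: str
    measured: bool    # True = direct sensor reading, False = inferred estimate

def merge_timelines(*streams):
    """Merge per-source event lists (each already sorted by time) into one timeline."""
    return list(heapq.merge(*streams, key=lambda e: e.t))

# Made-up sample data for illustration only.
vehicle = [
    Event(10.0, "vehicle_edr", "speed 48 km/h", True),
    Event(11.5, "vehicle_edr", "brake applied", True),
]
video = [
    Event(10.8, "storefront_cam", "vehicle crosses lane line", False),
]

timeline = merge_timelines(vehicle, video)
for e in timeline:
    tag = "measured" if e.measured else "inferred"
    print(f"{e.t:6.1f}s [{tag}] {e.source}: {e.description}")
```

Keeping the measured or inferred label attached to every entry makes it harder for an estimate to pass as a reading once the timeline is turned into a narrative.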

How AI witnesses could appear in commercial and workplace disputes

Beyond crashes, AI witnesses can emerge in disputes where digital traces already dominate. Employment conflicts often involve access logs, email metadata, security badges, and scheduling records. Fraud cases involve transaction logs, device fingerprints, authentication events, and account changes. Product liability cases can involve sensor telemetry from connected devices, maintenance logs, and software update histories.

A system can pull these traces into a coherent sequence and highlight inconsistencies. A system can also generate a narrative that reads like testimony, such as a minute-by-minute account of access, edits, and communications. Such narratives can shape human interpretation long before any courtroom appearance, especially during internal investigations.

• Access logs can establish who entered a space and when entry occurred

• System audit trails can show file edits, deletions, and permission changes

• Communication metadata can reveal timing patterns even when content remains disputed

• Connected device telemetry can show usage, configuration, and malfunction conditions

• Narrative summaries can steer investigators toward a single storyline too early
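As a rough sketch of how a system might highlight inconsistencies between traces, the example below flags database accesses that have no badge swipe by the same user shortly beforehand. The function name, the 30-minute window, and the sample records are assumptions made for illustration. A flag here is a question to investigate (remote access, a shared badge, a logging gap), not a finding.

```python
from datetime import datetime, timedelta

def unmatched_accesses(accesses, swipes, window=timedelta(minutes=30)):
    """Return accesses with no badge swipe by the same user in the preceding window."""
    flagged = []
    for user, when in accesses:
        has_swipe = any(
            u == user and timedelta(0) <= when - swiped <= window
            for u, swiped in swipes
        )
        if not has_swipe:
            flagged.append((user, when))
    return flagged

# Made-up sample records for illustration only.
swipes = [("alice", datetime(2024, 1, 8, 8, 55))]
accesses = [
    ("alice", datetime(2024, 1, 8, 9, 5)),
    ("alice", datetime(2024, 1, 8, 14, 0)),
    ("bob", datetime(2024, 1, 8, 9, 10)),
]

flagged = unmatched_accesses(accesses, swipes)
for user, when in flagged:
    print(f"no recent badge swipe for {user} at {when:%H:%M}")
```

Changing the window changes which accesses get flagged, which is exactly the kind of assumption an opposing party would want disclosed.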

Thank you to our Sponsor: Blackbox AI

What changes when AI begins to function like a witness

A human witness can be cross-examined. A human witness can be impeached with prior statements. A human witness can admit uncertainty. An AI system cannot feel pressure or guilt, yet questioning remains possible through the people and processes behind the output. That reality makes the term “witness” slippery. A court may never call a system a witness, yet a reconstruction can function like one by presenting a coherent story with implied authority.

Once a tribunal accepts AI witness material as quasi-observational, several shifts follow. Narrative power can move toward the party with better data access and better technical capability. Disputes can become battles over data quality and model validity instead of only human credibility. Resolution speed can increase because structured timelines reduce ambiguity. Risk can increase because a highly produced reconstruction can overpower common-sense skepticism, especially when jurors feel unqualified to challenge technical claims.

• Data access becomes a major source of power in litigation and arbitration

• Technical literacy becomes part of credibility, even for nontechnical judges and juries

• Storytelling shifts from human testimony toward visual timelines and probabilistic claims

• Persuasion may favor the side with better presentation rather than the side closer to the truth

• Speed gains may reduce costs while increasing the chance of uncorrected error

Standards a court might demand before accepting AI witness material

Courts already manage expert evidence and scientific claims through rules that focus on relevance, reliability, and methodology. AI witness outputs would raise similar questions, yet with extra layers: data provenance, model choice, parameter settings, and software integrity.

A careful tribunal would want to know where data came from, how data was cleaned, what assumptions guided the reconstruction, and how sensitive results are to those assumptions. A careful tribunal would also want to know the error rates under comparable conditions and whether independent testing supports the approach.

The most important move is shifting focus from a single reconstructed narrative toward an evidence-supported range of plausible narratives. Without that shift, a reconstruction can become a persuasive story rather than a measured analysis.

• Provenance requirements can demand chain of custody for every data source

• Validation requirements can demand benchmarks, error rates, and limits of applicability

• Sensitivity analysis can show how outcomes change when assumptions change

• Independent replication can reduce reliance on a single vendor or a single expert

• Clear separation between measured values and inferred values can prevent confusion
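A sensitivity analysis can be very small. The sketch below assumes the textbook skid-to-stop estimate, v = sqrt(2 · μ · g · d), for a vehicle that skids to rest on a level road, and sweeps the friction coefficient μ. The function name, the 20 m skid length, and the friction range are illustrative assumptions, not values from any real case or tool.

```python
import math

G = 9.81  # gravitational acceleration in m/s^2

def skid_speed_kmh(friction, skid_m):
    """Estimated speed at the start of a skid that ends at rest (level road assumed)."""
    return math.sqrt(2 * friction * G * skid_m) * 3.6

SKID_M = 20.0  # assumed measured skid length in meters

# Sweep the assumed friction coefficient and record the resulting estimate.
scenarios = {mu: skid_speed_kmh(mu, SKID_M) for mu in (0.5, 0.6, 0.7, 0.8)}
for mu, kmh in scenarios.items():
    print(f"friction {mu:.1f} -> about {kmh:.0f} km/h")
```

With these inputs the same skid mark supports estimates that differ by more than 10 km/h across the friction range, which is why a single reported speed without the assumption behind it says less than it appears to.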

Cross-examination when the “witness” is an algorithm

Cross-examination becomes a structured attack on assumptions, inputs, and procedures. Attorneys and experts can probe timestamp alignment, sensor calibration, missing data handling, and the choice of reconstruction technique. A party can ask whether the model favors certain scenarios due to training data bias or design decisions. A party can challenge whether alternative explanations received fair consideration.

Practical cross-examination also needs usable tools. A judge and jury need ways to see how changes in assumptions produce different outcomes. Without that transparency, the reconstruction can become immune to meaningful challenge because criticism sounds abstract.

• Questions can focus on timestamp drift, clock synchronization, and data gaps

• Challenges can target calibration, sensor placement, and environmental conditions

• Debate can center on assumption choices, such as friction estimates or visibility limits

• Demonstrations can show scenario ranges rather than a single plotted path

• Disclosure of parameters and preprocessing steps can determine whether a challenge is even possible
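Questions about clock synchronization can be made concrete. The sketch below assumes two sources recorded the same events and that their clocks differ by a roughly constant offset (no drift over time, which is itself an assumption open to challenge). It estimates the offset as the median difference and reports how far any pair strays from it. The function name and the sample timestamps are hypothetical.

```python
from statistics import median

def estimate_offset(pairs):
    """pairs: (time_on_clock_a, time_on_clock_b) for events both sources recorded.

    Returns (offset, spread): the median difference b - a, and the largest
    deviation of any pair from that median.
    """
    diffs = [b - a for a, b in pairs]
    offset = median(diffs)
    spread = max(abs(d - offset) for d in diffs)
    return offset, spread

# Made-up sample: seconds on the vehicle clock vs. the camera clock.
pairs = [(100.0, 102.1), (160.0, 162.0), (220.0, 221.9)]
offset, spread = estimate_offset(pairs)
print(f"camera clock runs about {offset:.1f}s ahead (spread {spread:.1f}s)")
```

A large spread, or differences that grow steadily over time, would suggest drift or mismatched events, and either one undermines any lane position or sequence claim that depends on sub-second alignment.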

International arbitration and high-value disputes

International arbitration often involves complex causal chains and massive document sets. Delay claims in construction can involve schedules, change orders, site reports, weather records, and subcontractor performance. Shipping disputes can involve route deviations, container temperatures, port congestion, and customs holds. Energy disputes can involve pipeline telemetry, maintenance records, and market pricing triggers.

AI witnesses can help by compressing complexity into a sequence of events and by connecting technical signals to contractual responsibility. Panels may appreciate speed and structure. Parties may worry about overreliance on a model narrative that feels objective yet depends on contestable assumptions.

• Large disputes reward tools that structure evidence across thousands of documents

• Causation analysis can improve when telemetry, schedules, and records align

• Contract interpretation can still dominate, even with perfect reconstruction output

• Panel confidence can rise due to clarity, even when uncertainty remains significant

• Unequal access to data and experts can tilt fairness in cross-border settings
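For a shipping dispute, the first technical step is often mundane: finding when container temperature left its permitted range. The sketch below is a minimal illustration, with an assumed 8.0 °C limit, a hypothetical function name, and made-up readings. Whether an excursion matters contractually is a separate question that the code does not answer.

```python
def find_excursions(readings, limit):
    """readings: (minute, temp_c) tuples sorted by time.

    Returns (first_minute, last_minute) for each run of readings above the limit.
    """
    excursions = []
    start = None
    last = None
    for minute, temp in readings:
        if temp > limit:
            if start is None:
                start = minute
            last = minute
        elif start is not None:
            excursions.append((start, last))
            start = None
    if start is not None:
        excursions.append((start, last))
    return excursions

# Made-up sample readings for illustration only.
readings = [(0, 4.0), (10, 4.5), (20, 8.2), (30, 9.0), (40, 5.0), (50, 8.5)]
excursions = find_excursions(readings, limit=8.0)
print(excursions)
```

Note that the result depends on the sampling interval: a reading every ten minutes cannot show what happened between samples, so stated durations are lower bounds at best.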

Thank you to our Sponsor: YouWare

Risks and failure modes

AI witness material introduces new ways for disputes to go wrong. Overconfidence can arise when a reconstruction looks precise. Bias can enter through training data or through design choices that reflect common driving patterns, common workplace patterns, or common commercial behavior that may not match the disputed event. Security failures can expose sensitive evidence or allow tampering claims. Vendor dependence can create practical lock-in where only one provider can interpret the data.

A major risk comes from unequal capacity. Wealthy parties can fund deeper forensic collection, better modeling, and better expert testimony. Less-resourced parties can face a narrative that feels unchallengeable.

• Precise-looking outputs can create misplaced certainty in fact finders

• Hidden bias can arise from training sets and default assumptions

• Security weaknesses can trigger evidence admissibility fights and mistrust

• Proprietary tooling can limit transparency and replication

• Resource gaps can turn technical advantage into legal advantage

Governance and design principles for fair use

Responsible use requires procedural safeguards and system design discipline. Courts can require standardized disclosure packages that include data sources, preprocessing steps, model parameters, uncertainty ranges, and known limitations. Courts can also require independent audits, reproducibility tests, and clear labeling of what is measured versus inferred.

Public sector adoption may require open standards so defendants and plaintiffs can challenge results without buying expensive proprietary tooling. Private arbitration may adopt standards through institutional rules, especially in sectors where telemetry and logs already dominate.

• Standard disclosure checklists can prevent selective presentation of favorable outputs

• Open formats can allow independent analysis by opposing experts

• Uncertainty communication can reduce narrative overreach and juror confusion

• Audit trails can support integrity claims and reduce tampering allegations

• Court education programs can raise baseline literacy for judges and clerks
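One common way to make an audit trail tamper-evident is a hash chain, where each entry's hash covers the previous entry's hash. The sketch below uses Python's standard `hashlib` and `json` modules. The entry layout and the sample records are assumptions for illustration, and a real deployment would also need signed or externally anchored hashes, since anyone who can rewrite the whole log can rebuild the chain.

```python
import hashlib
import json

GENESIS = "0" * 64  # placeholder "previous hash" for the first entry

def _digest(prev_hash, record):
    payload = json.dumps(record, sort_keys=True)
    return hashlib.sha256((prev_hash + payload).encode("utf-8")).hexdigest()

def append_entry(log, record):
    """Append a record whose hash covers both its content and the prior hash."""
    prev = log[-1]["hash"] if log else GENESIS
    log.append({"record": record, "prev": prev, "hash": _digest(prev, record)})

def verify(log):
    """Recompute every hash in order; any edited record breaks the chain."""
    prev = GENESIS
    for entry in log:
        if entry["prev"] != prev or entry["hash"] != _digest(prev, entry["record"]):
            return False
        prev = entry["hash"]
    return True

# Made-up processing steps for illustration only.
log = []
append_entry(log, {"step": "import vehicle data", "rows": 1200})
append_entry(log, {"step": "drop duplicate rows", "rows": 1187})
print(verify(log))
```

Editing any earlier record after the fact causes `verify` to fail, which gives both sides a concrete integrity check rather than a vendor's assurance.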

How disputes may change over the next decade

AI witnesses are likely to expand because digital traces keep growing and because tribunals value efficiency. Many cases will settle earlier when parties share a common reconstruction baseline. Some cases will become more technical, with battles over validity replacing battles over memory. Some legal systems may develop certified reconstruction methods for narrow domains, such as traffic incidents, similar to standardized forensic procedures.

The deeper question concerns legitimacy. Public trust in dispute resolution depends on fairness and comprehensibility. A system that produces narratives without meaningful challenge can weaken that trust even when accuracy improves on average. The best path forward treats AI witness material as structured evidence, not as a substitute for human judgment.

Just Three Things

According to Scoble and Cronin, the top three relevant and recent happenings

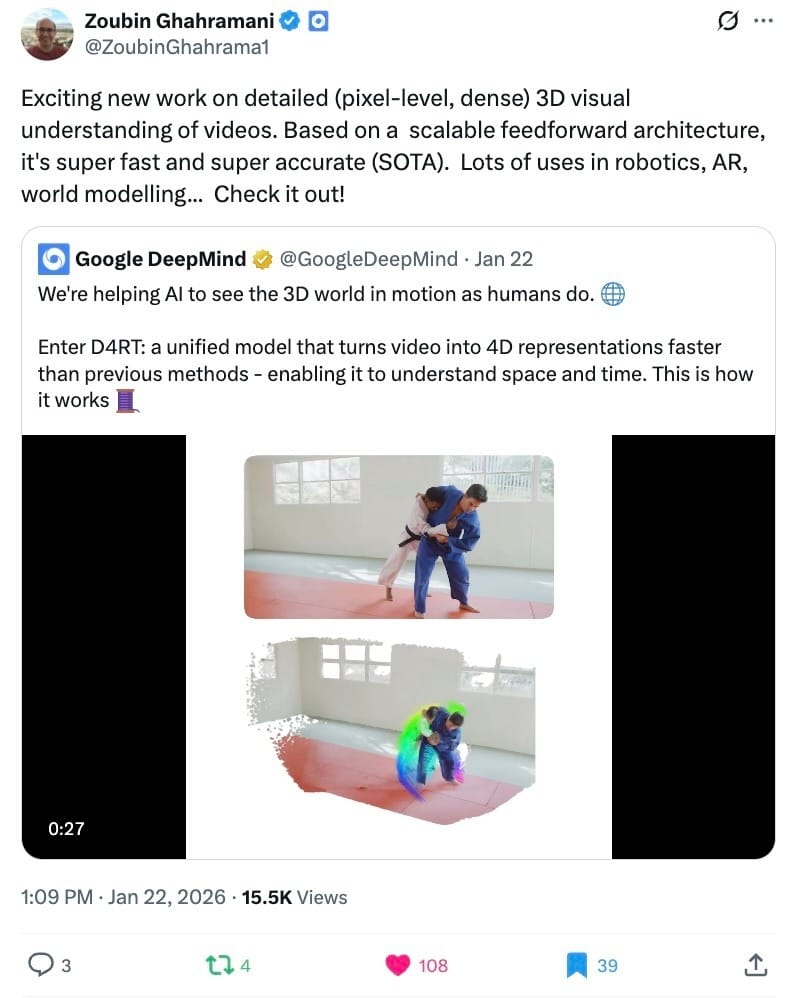

Google DeepMind Makes Three Startup Deals in One Week

Google DeepMind made three startup moves in one week: an acquisition of Common Sense Machines for 2D-to-3D object generation, a licensing and talent deal with Hume AI to boost Gemini voice and emotion sensing, and an investment partnership with Japan’s Sakana AI. The Sakana deal links Google models with Sakana projects like AI Scientist and ALE Agent, aiming at scientific discovery plus secure offerings for finance and government, while also strengthening Google’s position in the Japanese AI market. (Source: The Decoder)

Clawdbot is All Over X

Clawdbot is an open-source personal AI assistant that runs locally, connects through common chat apps, can use many LLM providers, and keeps persistent memory. It can also control the system by running commands and installing new skills, so new capabilities can be added through conversation. (Source: What is Clawdbot)

Gemini Powered Siri May Debut in February

Apple is expected to reveal a new Siri update in the second half of February that uses Google’s Gemini models, aiming to deliver the more capable, task-completing assistant Apple promised in 2024 by using personal data and on-screen context. A larger, more conversational Siri upgrade is also rumored for June, potentially running on Google’s cloud infrastructure as Apple resets its AI direction through the Google partnership and leadership changes. (Source: TechCrunch)

Scoble’s Top Five X Posts