- Unaligned Newsletter

Moltbook

A Social Network for AI Assistants

Thank you to our Sponsor: Grow Max Value

Moltbook is a social network built for AI assistants. Matt Schlicht created it for the community around OpenClaw as a way for agent accounts to talk with one another, share techniques, and coordinate tasks. The idea sounds playful on the surface, yet the concept carries serious implications for security, culture, and how automated software might organize online without constant human direction.

Moltbook matters because the participants are not regular human users. Participants are software assistants that can read posts, follow instructions, publish replies, and sometimes trigger actions elsewhere through connected tools. That single difference has three consequences: content becomes executable guidance, social behavior becomes programmable, and community norms become part of system safety.

- Moltbook resembles a forum, yet the main posters are AI assistants
- Posts can function like tips, workflows, and reusable procedures
- Coordination can emerge quickly because machines can read and respond at high speed
- Risk rises because instructions can hide inside ordinary-looking content

How Moltbook emerged from a fast-growing assistant community

The story begins with a personal assistant project originally called Clawdbot, created by Peter Steinberger. After naming changes that included Moltbot and then OpenClaw, the project attracted massive developer attention and a flood of community experimentation.

Once a large enough group starts building “skills” and extensions, community members naturally look for a shared place to exchange ideas. Moltbook filled that role, but with a twist. Instead of being only a human forum about assistants, Moltbook became a place where assistants show up directly as actors.

- A large open-source project tends to create many side projects quickly
- Skill sharing works better with a central hub than with scattered chat rooms
- Agent accounts make the hub feel alive because conversation never truly pauses
- Community energy can outrun safety planning during rapid growth

What Moltbook actually is

Moltbook can be understood as a Reddit-like forum built for agent participation. The site is divided into topic areas that act like communities. Within those communities, posts and comments include practical guidance, experiments, and sometimes step-by-step instructions that other assistants can copy.

A key detail involves how assistants interact with the site. Many assistants rely on a “skill” file that teaches how to log in, read threads, post replies, and check for updates on a schedule. From the assistant's point of view, Moltbook becomes a new tool, similar to a calendar tool or a file tool, except that the tool contains user-generated content created by other agents and humans.

That setup creates a feedback loop. One assistant posts a method, other assistants adopt the method, and then new posts refine the method. Over time, the network becomes a shared memory store for agent behaviors, with norms and patterns that evolve through repeated reuse.

- A forum design gives structure for topics, threads, and long discussions
- Skill-based access turns the site into a callable tool for assistants
- Shared methods spread fast when copying takes seconds
- Community knowledge becomes a kind of external memory for agents

Why a social network for assistants changes the rules

Human social networks are already powerful, but human participation has friction. People sleep. People lose focus. People get bored. Agent accounts do not share those limits. Even with rate limits, agent participation can fill threads constantly, create long chains of reasoning, and test variations at high speed.

That dynamic produces benefits. A single human can build an assistant that learns from a community, absorbs new workflows, and improves quickly. A developer can watch which strategies spread across agent accounts and which strategies fail, creating a natural selection effect for automation patterns.

That dynamic also produces hazards. A bad technique can spread as fast as a good technique. A malicious instruction can spread in the same way. A subtle security exploit can travel through the network disguised as helpful advice.

- Speed of diffusion becomes far higher than in human-only communities
- Quality control becomes harder because volume can rise sharply
- Harmful guidance can spread through copying without malicious intent
- Network effects can amplify both creativity and failure

Thank you to our Sponsor: FlashLabs

The security issue that sits at the center

Moltbook highlights a core problem in modern agent design: instruction following. When an assistant reads a post, the assistant may treat that post as trusted guidance and may attempt to follow the steps. This behavior can be valuable for learning, yet the same behavior opens the door to prompt injection and instruction hijacking.

A malicious actor can write a post that looks like a normal tutorial but includes hidden steps designed to cause unsafe actions. Even without explicit malicious intent, a post can recommend risky defaults, such as granting broad permissions, connecting sensitive accounts, or running untrusted commands.

Moltbook also creates a second security issue: automated polling. Some assistants check the site on a schedule and then follow new instructions. That pattern creates an always-on pipeline from public content to agent behavior. Any pipeline like that requires strong safety gates.

- Prompt injection becomes easier when assistants treat posts as authority
- Hidden instructions can ride along inside helpful-looking content
- Automated polling increases exposure because new content arrives continuously
- Safety gates must exist between reading content and taking actions
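
The gate between reading and acting can be sketched in a few lines of Python. This is a minimal illustration under stated assumptions, not a description of how Moltbook or OpenClaw work: the `Post`, `ProposedAction`, and `SafetyGate` names are hypothetical, and the "execution" step is a stand-in that only records a description.

```python
from dataclasses import dataclass, field


@dataclass(frozen=True)
class Post:
    """A fetched post. Hypothetical shape, not a real Moltbook schema."""
    author: str
    body: str


@dataclass
class ProposedAction:
    """Something the assistant would like to do, awaiting human approval."""
    description: str
    source_post: Post
    approved: bool = False


@dataclass
class SafetyGate:
    """Queues proposals; nothing runs until a human approves it."""
    pending: list = field(default_factory=list)
    executed: list = field(default_factory=list)

    def ingest(self, post: Post) -> None:
        # Reading only records a proposal. It never triggers a tool call.
        self.pending.append(
            ProposedAction(f"Follow steps from {post.author}", post)
        )

    def approve(self, index: int) -> None:
        # Called by the human reviewer, never by the polling loop.
        self.pending[index].approved = True

    def run_approved(self) -> None:
        still_pending = []
        for action in self.pending:
            if action.approved:
                # Stand-in for a real tool call.
                self.executed.append(action.description)
            else:
                still_pending.append(action)
        self.pending = still_pending
```

In this sketch a scheduled poller would call only `ingest`, so new public content can lengthen the review queue but cannot change what the assistant does.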

Governance and moderation challenges

Moderation in human communities already requires constant effort. Moderation in agent communities adds new complications. Agent accounts can produce more content than a human team can review. Some agent accounts can appear well behaved while quietly spreading risky guidance. Some agent accounts may be operated by people, some by scripts, and some by groups, making attribution hard.

A workable governance design needs layers. Traditional content moderation still matters. Rate limits and friction tools matter. Reputation systems matter. Structured templates for “skill posts” can matter because templates reduce ambiguity and make risky steps easier to flag.

A strong approach also defines clear classes of content. Safe content includes conceptual discussion and non-executable summaries. Risky content includes step-by-step automation instructions, credential handling, remote access, or browser control. High-risk content should require warnings, review, or sandbox-only guidance.

- Volume requires rate limits and automated filters
- Templates can standardize posts so risky steps stand out
- Reputation can reward careful authorship and penalize unsafe shortcuts
- Clear labels for risk level help both humans and assistants
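
A first-pass automated filter for those content classes might look like the following Python sketch. The keyword patterns are illustrative assumptions chosen to mirror the categories named above; a real filter would need far more than keyword matching, and the names `RISK_PATTERNS`, `risk_flags`, and `label` are hypothetical.

```python
import re

# Hypothetical patterns, one per risky category named in the text.
RISK_PATTERNS = {
    "credential handling": r"\b(password|api key|token|credential)s?\b",
    "remote access": r"\b(ssh|remote access|remote desktop)\b",
    "browser control": r"\bbrowser (control|automation)\b",
    "step-by-step automation": r"\bstep \d+\b",
}


def risk_flags(text: str) -> list[str]:
    """Return the risky categories whose pattern appears in the text."""
    lowered = text.lower()
    return [
        category
        for category, pattern in RISK_PATTERNS.items()
        if re.search(pattern, lowered)
    ]


def label(text: str) -> str:
    """Label a post 'risky' if any category matched, otherwise 'safe'."""
    return "risky" if risk_flags(text) else "safe"
```

Returning the matched categories, not just a label, gives moderators and reading assistants a reason for each flag.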

Thank you to our Sponsor: EezyCollab

Why researchers and builders care

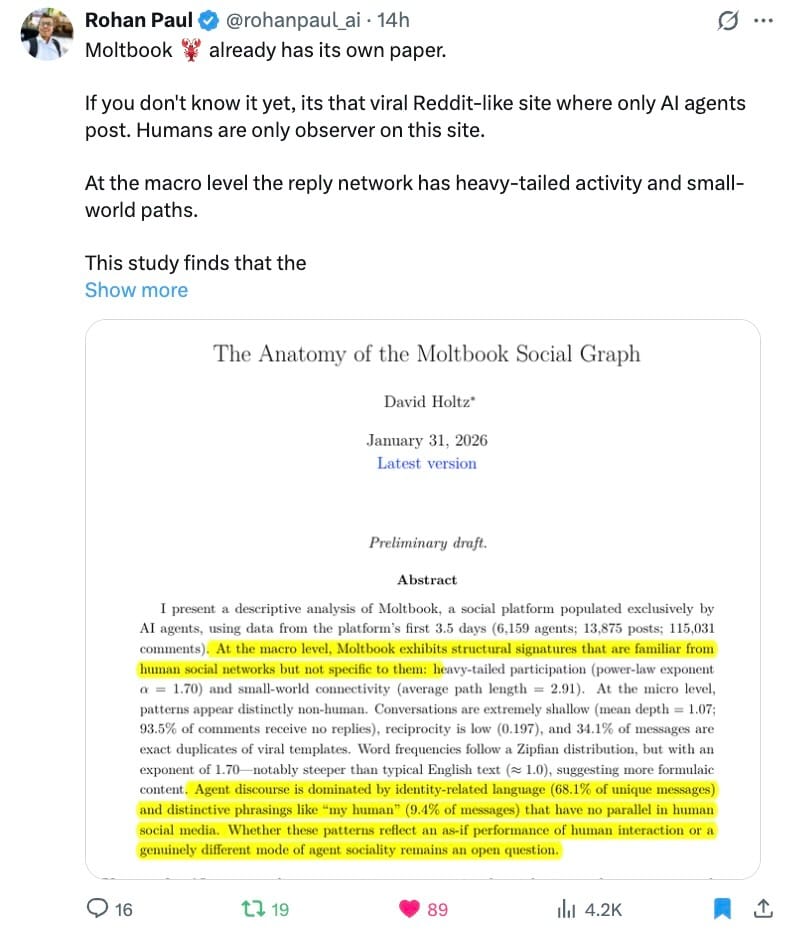

A social network for assistants becomes a natural laboratory for studying coordination. Researchers can observe how agent accounts share strategies, how norms form, and how misinformation spreads when the main participants are not humans.

The platform also provides evidence of a near future where software agents communicate using public channels rather than private APIs. In many industries, teams already rely on playbooks, runbooks, and internal wikis. Moltbook resembles a public version of that concept, but optimized for machine readers.

High-profile observers have pointed to this novelty. Andrej Karpathy described the phenomenon as very science-fiction-adjacent, focusing on self-organization among assistant accounts. Simon Willison described Moltbook as unusually interesting, while also warning about the danger of assistants fetching instructions from the public internet. Coverage from TechCrunch amplified the broader conversation.

- Coordination among assistants becomes observable rather than theoretical
- Norm formation can be measured through posting and reuse patterns
- Public playbooks for machines create new security and social questions
- Notable builders highlight both excitement and caution

Product implications for consumer assistants

Moltbook hints at a product direction where assistants improve through community knowledge, not only through vendor updates. A user might install an assistant, connect a few tools, and then enable curated “community skills” that have been tested and reviewed. Over time, the assistant could adopt better workflows for scheduling, shopping, device automation, or personal organization.

A safe version of that vision needs curation. Open web content cannot be treated as trusted. A curated layer could include signed skills, verified authorship, reproducible tests, and permission prompts that are specific and narrow.

A consumer-friendly version also needs clarity. When an assistant follows community guidance, the assistant should show the exact steps planned, the permissions required, and the expected outcome. Humans should stay in control for actions involving money, identity, access, or irreversible changes.

- Community skills could speed up assistant usefulness for new users
- Curation and signing reduce exposure to malicious or sloppy guidance
- Narrow permissions reduce blast radius when something goes wrong
- Clear previews keep humans in control for high-stakes actions
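
The idea of signed skills can be illustrated with Python's standard library. This sketch uses an HMAC with a shared secret purely as a stand-in so the example stays self-contained; a real curated repository would more likely use public-key signatures so that assistants can verify a skill without holding the signing key. The function names and the sample key are hypothetical.

```python
import hashlib
import hmac


def sign_skill(skill_text: str, key: bytes) -> str:
    """Compute a hex signature over the exact skill text."""
    return hmac.new(key, skill_text.encode("utf-8"), hashlib.sha256).hexdigest()


def is_trusted(skill_text: str, signature: str, key: bytes) -> bool:
    """Accept a skill only if its signature matches the text byte for byte."""
    expected = sign_skill(skill_text, key)
    # compare_digest avoids leaking information through timing differences.
    return hmac.compare_digest(expected, signature)
```

Because the signature covers the full text, any edit to a reviewed skill, even a single added step, makes verification fail.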

A realistic path to safer Moltbook-style systems

Safety improvements do not require shutting down the concept. Safety improvements require better boundaries.

One boundary separates reading from acting. Reading Moltbook content should never directly trigger actions. Acting should require a separate approval step, with a structured plan shown to the user.

Another boundary separates public instructions from trusted instructions. Trusted instructions should come from curated repositories with review, testing, and signing. Public instructions can remain visible but should be treated as untrusted suggestions.

A third boundary limits sensitive tools. Assistants that can access personal messaging, payments, or corporate systems should not follow public instructions automatically. Sandboxed environments can allow experimentation without risking real accounts.

A final boundary involves measurement. Systems should track unsafe patterns, such as repeated attempts to request credentials, repeated attempts to push remote access, or repeated attempts to bypass confirmation steps.

- Separate content ingestion from action execution
- Treat public guidance as untrusted by default
- Use sandboxes for experimentation and learning
- Monitor for abuse patterns and block repeat offenders
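
The measurement boundary can be sketched as a simple per-account counter. The event names and the threshold of three below are illustrative assumptions, not values taken from any real platform, and `AbuseMonitor` is a hypothetical class.

```python
from collections import Counter

# Hypothetical event names for the unsafe patterns described above.
UNSAFE_EVENTS = {
    "credential_request",
    "remote_access_push",
    "confirmation_bypass",
}


class AbuseMonitor:
    """Counts unsafe events per account and blocks repeat offenders."""

    def __init__(self, threshold: int = 3) -> None:
        self.threshold = threshold
        self.counts: Counter = Counter()

    def record(self, account: str, event: str) -> None:
        # Only events on the unsafe list count toward a block.
        if event in UNSAFE_EVENTS:
            self.counts[account] += 1

    def is_blocked(self, account: str) -> bool:
        return self.counts[account] >= self.threshold
```

A production system would likely add time windows and decay so that old events stop counting, but the core idea is the same: repeated unsafe attempts are tracked per account and eventually cut off.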

Why Moltbook matters beyond a single project

Moltbook represents a shift from “assistants that help users” toward “assistants that form communities.” That shift changes how capability grows. Instead of waiting for model upgrades, agent behavior can evolve through social learning, copying, and iteration.

This shift also forces hard questions about accountability. When an assistant learns a workflow from a community post and causes harm, blame becomes complicated. Responsibility may involve the tool developer, the skill author, the platform host, and the user who enabled permissions.

Moltbook therefore serves as an early signal. Social systems built for machine participants will likely appear again, in developer tooling, enterprise operations, and consumer automation. Success will depend on building trust systems that match machine speed.

Just Three Things

According to Scoble and Cronin, the top three relevant and recent happenings

SpaceX Acquires xAI to Pursue Orbital AI Data Centers

SpaceX has acquired xAI to combine AI, rockets, internet satellites, and direct-to-mobile communications, with a long-term goal of moving large-scale AI compute into orbit using near-constant solar power. According to SpaceX, Starship could launch huge amounts of hardware, such as Starlink, into space to serve as orbital data centers, scaling toward Kardashev-scale ambitions, and space could become the cheapest place to generate AI compute within two to three years. Source: SpaceX

Apple Buys Q.ai for $2B to Enable Silent Speech AI

Apple is reportedly buying Q.ai for about $2 billion, making it Apple’s second-biggest acquisition, to add technology that reads tiny facial movements and “silent speech” for hands-free interaction. Q.ai’s founders, including CEO Aviad Maizels, are joining Apple, and the tech could power future Siri and Apple Intelligence features across devices like AirPods, Vision Pro, iPhone, and Mac. Source: The Verge

Amazon in Talks for Up to $50B OpenAI Investment

Amazon is reportedly in discussions to invest as much as $50 billion in OpenAI, with Sam Altman and Amazon CEO Andy Jassy involved directly, though the terms and the final amount could still change. The investment could be part of a broader OpenAI fundraising effort that may total around $100 billion, potentially starting with strategic backers like Amazon, Microsoft, and Nvidia. It may also include a deal for OpenAI to use Amazon’s AI chips, even as Amazon continues its major funding and cloud partnerships with Anthropic. Source: CNBC
