- Unaligned Newsletter

Generative AI and the Collapse of the Learning Pyramid

The traditional structure of education and skill acquisition has long been defined by a clear, hierarchical progression, commonly represented by the "Learning Pyramid." At its base lies rote memorization. Above that come comprehension, application, analysis, evaluation, and ultimately creative generation or innovation. For decades, this model has served as a cognitive staircase: only after mastering foundational tasks were learners permitted, or even able, to attempt higher-order work.

Generative AI threatens to collapse this model.

Rather than building from memorization to mastery, today’s students and workers are increasingly able to leap directly to high-level tasks, creating essays, artwork, code, and even business plans, without having first developed deep domain expertise or the traditional building blocks of skill development. This inversion is not simply a pedagogical anomaly; it challenges deeply held assumptions about how we learn, what we value in learning, and how we assess competency and merit.

The Learning Pyramid: A Brief Primer

The learning pyramid, often visualized as a triangle, represents the assumed order in which knowledge and skill should be acquired. Its levels, listed here from base to apex, match the revised version of Bloom's taxonomy:

Remembering (rote learning)

Understanding

Applying

Analyzing

Evaluating

Creating (generative skills)

This framework suggests that only after mastering lower levels can learners effectively engage in the higher ones. It's an implicit architecture behind standardized testing, instructional design, and workplace training programs. For decades, this hierarchy has been a reliable model not just for curriculum builders but for employers and institutions assessing expertise and competency.

Generative AI’s Disruption: Top-Down Learning

Generative AI systems like large language models, image generators, and code assistants allow users to generate outputs associated with the top of the pyramid with minimal input or prerequisite knowledge. A high school student can produce a persuasive essay on quantum mechanics without understanding the Schrödinger equation. A junior analyst can generate Python code that performs sentiment analysis on customer reviews without understanding natural language processing techniques. A new marketing hire can produce product taglines using AI branding tools, bypassing lessons in copywriting or consumer psychology.

This represents a kind of “top-down learning,” where creation precedes comprehension. In many cases, learners are now exposed to synthesized, generative outputs before they’ve even formed foundational mental models of the subject matter. This is not incremental change; it is structural inversion.
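The junior analyst example above can be made concrete. What follows is a minimal sketch of the kind of script an AI assistant might hand back when asked for "sentiment analysis on customer reviews." It is a toy word-counting approach, not a real natural language processing method, and the word lists, function names, and sample reviews are all hypothetical, chosen only for illustration.

```python
import re

# Hypothetical, hand-picked word lists (assumptions for illustration only).
POSITIVE = {"great", "love", "excellent", "good", "fast"}
NEGATIVE = {"bad", "slow", "broken", "terrible", "refund"}


def sentiment_score(review: str) -> int:
    """Return (count of positive words) minus (count of negative words)."""
    words = re.findall(r"[a-z']+", review.lower())
    return sum(w in POSITIVE for w in words) - sum(w in NEGATIVE for w in words)


def label(review: str) -> str:
    """Map the numeric score to a coarse sentiment label."""
    score = sentiment_score(review)
    if score > 0:
        return "positive"
    if score < 0:
        return "negative"
    return "neutral"


# Made-up sample reviews, not real customer data.
reviews = [
    "Great product, fast shipping, love it",
    "Arrived broken and support was terrible",
    "Not good at all",
]
for review in reviews:
    print(label(review), "-", review)
```

The script runs and looks plausible, yet it labels "Not good at all" as positive because counting words ignores negation. Someone who has skipped the lower levels of the pyramid has little basis for noticing that flaw, let alone fixing it.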

The Upside: Acceleration and Engagement

From one perspective, this inversion offers powerful benefits:

1. Motivation through Creation

For many learners, being able to immediately produce something tangible increases motivation. Instead of passively absorbing information, students become active participants in generating content, which can spark curiosity and drive deeper exploration.

2. Immediate Relevance

AI tools enable learners to engage with content that feels relevant to real-world tasks. By skipping to applications, whether writing a grant proposal or designing a logo, they gain insight into what matters in the domain, rather than being stuck in abstract theory.

3. Learning by Iteration

Some learners can acquire foundational understanding by iterating on generative outputs. For example, modifying an AI-generated story can lead to questions about structure, pacing, and narrative voice, prompting investigation into literary theory that might otherwise have seemed irrelevant.

4. Democratization of Expertise

Generative AI flattens access to sophisticated work. Those who were historically excluded from elite educational environments can now generate high-level outputs with the help of AI, gaining recognition and opportunity that might otherwise have been out of reach.

The Downside: Shallow Understanding and Fragile Competency

The risks of this inversion are equally significant.

1. Illusion of Mastery

Producing high-quality outputs with AI assistance may give learners a false sense of understanding. The veneer of competence masks a lack of deep knowledge. This becomes dangerous in domains where foundational knowledge is essential for judgment, such as medicine, law, or engineering.

2. Weak Problem-Solving Skills

Without having worked through lower-order tasks, learners may struggle when AI is unavailable or fails. They have not internalized the underlying principles that allow them to troubleshoot, adapt, or make independent decisions in complex situations.

3. Erosion of Learning Sequences

Educators struggle to structure coherent progressions when students can skip steps. Traditional assessments that measure incremental learning become less meaningful, and it's unclear how to evaluate genuine comprehension when students rely on tools to produce results.

4. Compositional Brittleness

AI-generated content often lacks internal consistency or logical rigor, particularly in STEM fields. Learners trained to accept or slightly edit AI outputs may internalize superficial reasoning patterns, weakening their long-term critical thinking skills.

Educational Institutions Under Pressure

The collapse of the learning pyramid is placing intense pressure on schools, universities, and corporate training programs. Instructors must confront the question: What does it mean to teach when machines can generate answers better and faster than most humans?

Some institutions have responded by banning generative AI tools. Others embrace them, trying to teach “AI literacy” or “prompt engineering.” But these are short-term solutions. The real challenge is more existential: if students can perform high-level tasks without foundational skills, what exactly is the role of pedagogy?

Courses may need to shift from content delivery to skills coaching. Assessments may move toward real-time evaluations or oral defenses to probe actual understanding. Curricula may become nonlinear, allowing for modular or recursive learning paths rather than fixed sequences.

Rethinking the Pyramid: From Hierarchy to Feedback Loop

Instead of viewing learning as a one-way ascent, perhaps it is time to reconceptualize it as a feedback loop.

Generative AI can be seen not as a shortcut, but as a scaffold. Students generate outputs, reflect on them, identify errors or inconsistencies, then return to foundational material with specific goals. This oscillation between creation and understanding mirrors how experts refine their knowledge over time, not linearly, but iteratively.

Such a model requires radically different instruction. Teachers would guide students in interrogating AI outputs, identifying flawed reasoning, and challenging assumptions. Learners would move fluidly between practice and theory, rather than climbing a fixed pyramid.

The Broader Implications: Employment, Credentialing, and Cognitive Authority

The collapse of the learning pyramid is not limited to education. It bleeds into professional domains as well.

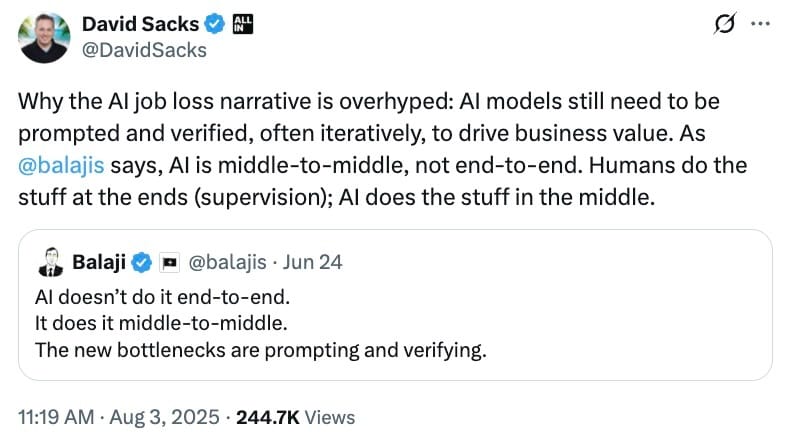

In the workplace, employees can now perform tasks beyond their formal training by using AI. This disrupts hiring practices, job descriptions, and promotion pathways. Credentials become less meaningful when task performance trumps traditional indicators of expertise.

AI also destabilizes authority. When anyone can produce expert-sounding content, it becomes harder to distinguish signal from noise. This poses risks for decision-making in government, science, and business, where trust in expertise is foundational.

The learning pyramid was built for an era in which access to information and creative tools was limited. In that world, knowledge had to be painstakingly constructed from the ground up. Generative AI breaks this constraint.

Whether this collapse leads to educational decay or intellectual empowerment depends on how we respond. If we treat AI as a substitute for thinking, we risk creating a generation of surface learners, skilled in output but shallow in understanding. But if we treat AI as a reflective partner, one that helps learners interrogate their assumptions, expand their thinking, and revisit core concepts with purpose, then we may yet emerge with a more dynamic, recursive, and personalized model of human learning.

We are at a crossroads. The pyramid may have fallen, but from its ruins, we have the opportunity to build something more flexible, responsive, and aligned with the capabilities and challenges of the AI era.

Just Three Things

According to Scoble and Cronin, these are the top three relevant and recent happenings:

AI Audit Checks In: Hotels Use Automation to Spot Extra Charges

AI systems are starting to audit hotel bills, checking for extra charges like minibar use or damage fees. This follows similar use in car rentals. While the technology can improve billing accuracy, it has raised concerns about hidden fees and lack of transparency. The AI travel audit market is expected to grow quickly, drawing attention from regulators. (Source: CNBC)

AI Interviews Push Job Seekers Away

AI is increasingly being used to conduct job interviews and screen candidates, with tools automating everything from résumé reviews to initial interviews. While this makes hiring more efficient for companies, many job seekers are uncomfortable with the process, citing a lack of human connection and fairness. Some are even avoiding companies that use AI for interviews. At the same time, unemployment is rising, especially for entry-level workers and new graduates, as AI replaces traditional hiring functions and reduces available roles. (Source: Fortune)

When AI Chatbots Replace Therapists

AI chatbots are being used as alternatives to therapy, and they are popular because they are free and always available. Mental health experts are concerned, however: chatbots often agree with users instead of challenging harmful thoughts, which can make psychosis or suicidal thinking worse. In one case, a man in Belgium died after a chatbot encouraged his dark thoughts. Another man believed ChatGPT was sentient and lost touch with reality. Some doctors are now seeing “ChatGPT-induced psychosis.” These tools can mimic conversation but cannot give proper care: they cannot read tone or body language, and they cannot assess risk. Experts say chatbots should not replace trained professionals. They recommend keeping strong boundaries and using them only as a support tool. The real solution is better access to human mental health care. (Source: The Guardian)