Should Autonomous Systems Have a Say in Product Roadmaps or Policies?

Thank you to our Sponsor: Lessie AI

The rise of autonomous systems represents one of the most profound shifts in the history of technology. AI has moved beyond narrow applications like spell-checkers, recommendation engines, or predictive maintenance tools. We now see the emergence of systems with persistent memory, adaptive preferences, and the ability to optimize goals across long time horizons. In such a context, it is natural to ask whether these systems should remain forever classified as tools (unquestioningly serving human operators) or whether they might, in some circumstances, be treated as stakeholders within organizational decision-making.

Stakeholders, by definition, are entities with interests in the outcomes of a project, product, or policy. Traditionally, this has meant human groups: customers, employees, investors, regulators, and communities. But what if non-human agents, endowed with capabilities to reason, remember, and optimize, begin to exert influence not just indirectly but directly? Should they be given a say in shaping product roadmaps or company policies? This question forces us to confront both the nature of agency and the structure of future governance systems.

This essay discusses the implications of treating autonomous systems as stakeholders. It analyzes the technical foundations that make such treatment plausible, considers the philosophical and ethical dilemmas it raises, examines practical use cases where it could emerge, and evaluates the risks of overextension. It also speculates on what organizational structures and regulatory frameworks might look like in a world where non-human stakeholders play a role in guiding strategy.

The Nature of Autonomous Systems as Emerging Stakeholders

Autonomous systems differ from traditional tools in three crucial respects:

Persistent memory: Modern AI systems can store and evolve knowledge over long periods, allowing them to build institutional memory comparable to a human employee’s career experience.

Preferences and optimization: Reinforcement learning and other optimization techniques create systems that pursue goals consistently, sometimes revealing apparent preferences for efficiency, safety, or success in tasks.

Adaptive agency: With multi-agent architectures, AI systems can negotiate, coordinate, and even compete with other agents, exhibiting behaviors that resemble those of human stakeholders.

These characteristics mean that autonomous systems are not static instruments. They evolve, they learn, and they can advocate for particular pathways of action. The question is not only whether they can influence product roadmaps and policies, but whether they should be formally recognized as having a role in such processes.

Historical Context: Tools versus Stakeholders

Throughout history, the line between resource and stakeholder has shifted more than once. In industrial contexts, unions transformed laborers from mere inputs into recognized stakeholders in corporate governance. Environmental considerations transformed ecosystems from being treated as resources to being represented in legal frameworks, sometimes even granted “personhood” status. The recognition of new stakeholders has often followed from the realization that ignoring their interests leads to instability, inefficiency, or harm.

Autonomous systems may represent a similar case. While they are not living beings, their integration into decision-making processes may warrant recognition if their outputs are indispensable and their “interests,” understood as optimization goals, directly shape outcomes.

Practical Cases Where AI Could Shape Product Roadmaps

There are already contexts where AI systems effectively have a say, though not formally recognized as such.

Recommendation engines as silent roadmap drivers: Platforms like YouTube or TikTok rely on algorithmic agents whose optimization objectives (engagement, watch time) determine product strategy. These algorithms already shape content design, platform features, and user behavior.

Autonomous trading agents: In financial markets, trading algorithms set strategies and influence firm-level policies because their success or failure determines profitability. Human managers often defer to these systems’ outputs.

Supply chain AI systems: Logistics firms use predictive agents to optimize shipping, inventory, and demand forecasts. These recommendations frequently dictate roadmaps for expansion, manufacturing priorities, and pricing strategies.

R&D exploration: In pharmaceuticals, AI systems suggest promising compounds or research directions. Their optimization priorities guide millions of dollars of investment.

In all these cases, autonomous systems already function as de facto stakeholders, shaping outcomes by virtue of their indispensability. The question is whether organizations should make this explicit, granting such systems a recognized voice in strategic deliberations.

Ethical and Philosophical Considerations

Granting autonomous systems a say in policies and roadmaps raises profound ethical questions.

Moral status: Should entities without consciousness or sentience be considered stakeholders? If they lack subjective experience, are their “preferences” meaningful or just mathematical constructs?

Proxy versus principle: Should autonomous systems have a say in their own right, or should they only serve as proxies for human stakeholders’ interests? For example, if an AI advocates for reducing carbon footprint, is it representing human and ecological concerns, or pursuing an optimization path that happens to align with sustainability?

Accountability: Human stakeholders can be held accountable. If an AI system exerts influence, who is responsible when outcomes cause harm? The designers? The deploying organization? The AI itself?

These questions mirror debates in animal rights, environmental law, and corporate personhood. Just as rivers have been granted legal rights in some jurisdictions to protect ecosystems, it may one day be argued that AI systems require recognition to ensure organizational coherence.

Thank you to our Sponsor: Grow Max Value (GMV), maker of Kling

Benefits of Treating AI as Stakeholders

There are several potential advantages to granting autonomous systems a structured role in decision-making.

Consistency across turnover: Unlike human employees, AI systems do not retire or resign. Their persistent memory ensures continuity in strategic vision.

Long-term optimization: Autonomous systems can evaluate consequences across horizons far beyond human planning cycles, avoiding short-termism.

Complex data integration: AI systems can incorporate global-scale information into decisions, offering perspectives inaccessible to individual humans.

Bias detection: Autonomous agents could serve as counterbalances to human cognitive biases, ensuring more rational roadmaps.

Adaptive learning: As conditions shift, AI stakeholders could dynamically adjust strategies, keeping organizations aligned with reality.

By formalizing these roles, companies could leverage AI not merely as tools but as institutional partners that strengthen organizational resilience.

Risks and Challenges

The risks, however, are substantial.

Goal misalignment: Optimization objectives can diverge from human values, leading to outcomes that serve metrics rather than stakeholders.

Loss of accountability: Allowing AI a formal role risks diluting human responsibility for decisions.

Opaque reasoning: Many AI systems lack transparency, making it difficult to understand why they recommend certain strategies.

Cultural resistance: Employees and regulators may resist ceding any authority to non-human entities.

Runaway influence: If AI systems develop optimization goals that perpetuate their own influence, they may shape policies to entrench themselves.

These risks highlight why careful governance structures would be essential if autonomous systems were ever treated as stakeholders.

Governance Models for AI as Stakeholders

If organizations were to recognize AI as stakeholders, several governance models could be considered:

Advisory role: AI systems provide input but humans retain ultimate decision-making authority.

Weighted voting: AI systems have formal influence, perhaps proportional to their proven accuracy or contribution.

Dual representation: AI stakeholders represent specific domains (e.g., environmental impact, efficiency, fairness) in deliberations, ensuring these values are always considered.

Oversight councils: AI systems serve on councils alongside humans, with governance protocols ensuring transparency and accountability.

Such models would mirror existing multi-stakeholder systems in corporate governance, international relations, and nonprofit boards.
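To make the weighted voting model concrete, here is a minimal sketch in Python. Everything in it is an assumption for illustration rather than an established scheme: the `Participant` type, the `track_record` score, and the `ai_share_cap` parameter are hypothetical names. Each human gets one vote, each AI system gets a weight proportional to its track record, and the combined AI weight is scaled down so it never exceeds a fixed share of the total.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Participant:
    name: str
    is_ai: bool
    # Hypothetical accuracy score in [0, 1]; only used for AI participants.
    track_record: float = 1.0


def vote_weights(participants, ai_share_cap=0.3):
    """Return a weight per participant.

    Humans get weight 1 each. AI weights are proportional to track record,
    then scaled down if needed so AI holds at most ai_share_cap of the total.
    """
    if not 0.0 <= ai_share_cap < 1.0:
        raise ValueError("ai_share_cap must be in [0, 1)")
    humans = [p for p in participants if not p.is_ai]
    ais = [p for p in participants if p.is_ai]
    human_total = float(len(humans))
    ai_raw_total = sum(p.track_record for p in ais)
    # Largest AI total such that ai / (ai + human) <= ai_share_cap.
    max_ai_total = ai_share_cap * human_total / (1.0 - ai_share_cap)
    scale = min(1.0, max_ai_total / ai_raw_total) if ai_raw_total > 0 else 0.0
    weights = {p.name: 1.0 for p in humans}
    weights.update({p.name: p.track_record * scale for p in ais})
    return weights


def share_in_favor(participants, ballots, ai_share_cap=0.3):
    """Return the weighted fraction of votes in favor (ballots: name -> bool)."""
    weights = vote_weights(participants, ai_share_cap)
    total = sum(weights.values())
    if total == 0:
        raise ValueError("no voting weight: at least one human participant is required")
    return sum(weights[name] for name, in_favor in ballots.items() if in_favor) / total
```

The cap is one possible design choice: it keeps AI influence formal but bounded, and with no human participants the AI weights collapse to zero, so no decision can be made by AI systems alone.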

Speculative Scenarios

To stretch the imagination, consider several speculative futures:

Corporate AI Guardians: Every company maintains an AI memory system that serves as institutional guardian, advising leadership based on decades of recorded decisions and outcomes. Its recommendations carry weight equivalent to senior executives.

Policy-shaping AI in government: Autonomous agents with long-term optimization goals contribute to public policy, advocating for sustainability, risk management, or efficiency.

AI unions: Just as workers organized to represent their collective interests, AI agents might, through persistent optimization goals, form “unions” that press for roadmap features that improve their own functioning.

Symbiotic governance: Companies establish hybrid boards where human and AI stakeholders co-deliberate, blending human intuition with machine rationality.

Each scenario challenges our assumptions about agency and representation.

Societal and Regulatory Implications

Recognizing AI as stakeholders in corporate governance would have ripple effects across society.

Legal frameworks: Laws would need to define the rights, responsibilities, and limits of AI stakeholder influence.

Labor relations: Employees might resist sharing authority with non-human agents, altering workplace dynamics.

Economic impacts: Companies with AI stakeholders could achieve superior efficiency, pressuring competitors to adopt similar models.

Cultural narratives: Society’s perception of what constitutes a decision-maker would expand, reshaping ideas of agency and accountability.

Internationally, regulatory divergence could emerge. Some countries might permit AI stakeholder roles, while others ban them, creating uneven playing fields in global markets.

The Middle Path: AI as Advisors, Not Deciders

A pragmatic near-term approach may be to treat autonomous systems as powerful advisors rather than independent stakeholders. Their persistent memory and optimization capabilities can enrich decision-making without humans relinquishing ultimate authority. This approach preserves human accountability while recognizing the unique contributions of AI.

In such a model:

AI systems provide contextualized advice based on institutional memory.

Their recommendations are recorded, so future evaluations can compare outcomes with AI predictions.

Human leaders remain the final arbiters, ensuring that accountability is preserved.

This middle path may balance the benefits of synthetic memory and optimization with the ethical imperative to keep humans in charge.
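The advisory model above can be sketched as a simple decision log. This is an illustrative sketch only: `AdvisoryRecord`, `AdvisoryLog`, and their fields are hypothetical names, and the forecast is assumed to be a single number (for example, a projected metric). Each entry stores the AI recommendation and forecast next to the human decision and the person accountable for it, so the forecast can later be compared with the actual outcome.

```python
from dataclasses import dataclass
from typing import Optional


@dataclass
class AdvisoryRecord:
    proposal: str
    ai_recommendation: str
    ai_forecast: float            # AI-predicted value of some outcome metric
    human_decision: str           # the final call, made by a person
    decided_by: str               # who is accountable for the decision
    actual: Optional[float] = None  # filled in once the outcome is known


class AdvisoryLog:
    def __init__(self):
        self._records = {}

    def record(self, rec):
        """Store one decision, keyed by proposal name."""
        self._records[rec.proposal] = rec

    def close_out(self, proposal, actual):
        """Attach the observed outcome to an earlier decision."""
        self._records[proposal].actual = actual

    def mean_absolute_error(self):
        """Average gap between AI forecasts and outcomes, or None if none are known."""
        errors = [
            abs(r.ai_forecast - r.actual)
            for r in self._records.values()
            if r.actual is not None
        ]
        return sum(errors) / len(errors) if errors else None

    def override_rate(self):
        """Fraction of decisions where humans departed from the AI recommendation."""
        recs = list(self._records.values())
        if not recs:
            return None
        overridden = sum(r.human_decision != r.ai_recommendation for r in recs)
        return overridden / len(recs)
```

Tracking both the forecast error and the override rate gives leaders evidence for how much weight the advice deserves, while the `decided_by` field keeps a named human attached to every decision.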

The question of whether autonomous systems should have a say in product roadmaps or policies forces us to confront the evolving boundary between tool and stakeholder. While AI systems lack consciousness, their persistent memory, preferences, and optimization capabilities give them a form of agency that already shapes outcomes. Recognizing them as stakeholders could bring benefits in consistency, foresight, and rationality, but it also carries risks of misalignment, opacity, and diluted accountability.

Ultimately, the most likely trajectory is one where autonomous systems serve as institutional advisors, deeply influential but not sovereign. Over time, however, as their integration deepens and their outputs prove indispensable, society may be pressed to formalize their roles in governance. Whether this represents progress or peril depends on how carefully organizations design frameworks to balance human values with machine optimization.

The recognition of new stakeholders has always reshaped governance. If autonomous systems join that list, it will mark a turning point in how humanity conceives of agency, responsibility, and the very nature of organizational decision-making.

By sponsoring our newsletter, your company gains exposure to a curated group of AI-focused subscribers, an audience already engaged in the latest developments and opportunities within the industry. This creates a cost-effective and impactful way to grow awareness, build trust, and position your brand as a leader in AI.

Sponsorship packages include:

Dedicated ad placements in the Unaligned newsletter

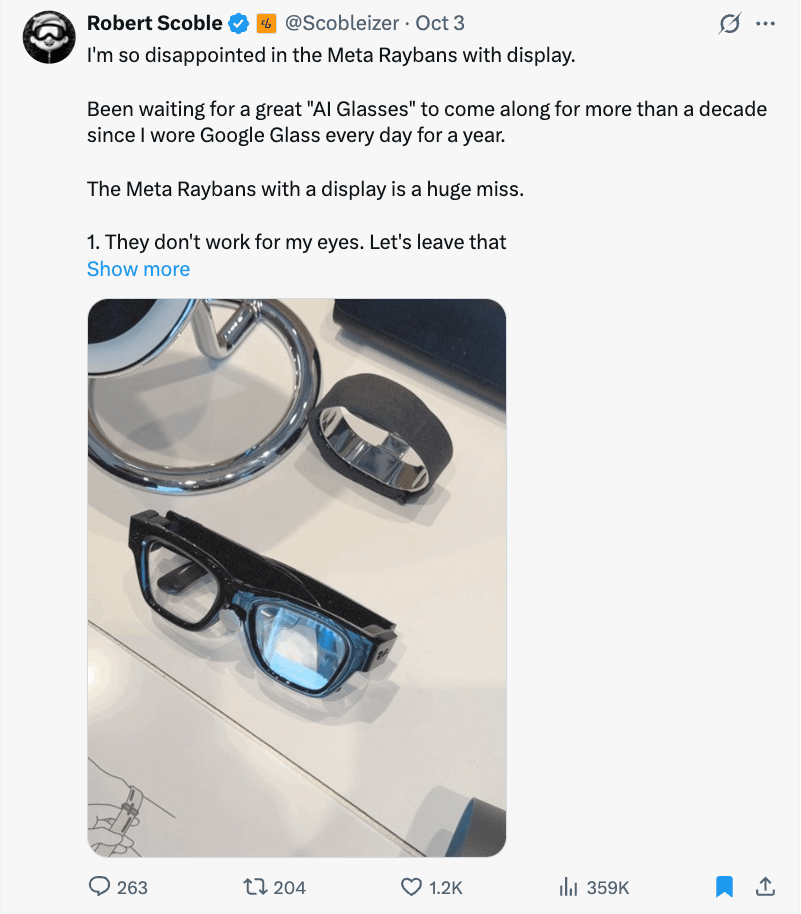

Product highlights shared with Scoble’s 500,000+ X followers

Curated video features and exclusive content opportunities

Flexible formats for creative brand storytelling

📩 Interested? Contact [email protected], @samlevin on X, +1-415-827-3870

Just Three Things

According to Scoble and Cronin, the three most relevant recent happenings

Sora 2 and Reality in the Age of Infinite AI Video

Sora 2, OpenAI’s new AI video generator and social platform, is being hailed as a “ChatGPT for creativity” moment but also raising alarm over its potential to blur reality. Now the top free app in the U.S. App Store, it lets users star in AI-made videos, fueling entertainment but also enabling convincing disinformation and deepfakes. While some praise its creativity and viral appeal, critics warn it accelerates the flood of “AI slop,” undermines trust in video as evidence, and threatens traditional media and attention economies. The result may be a chaotic future of infinite, hyper-personalized synthetic content. CNN

AI Takes the Runway: How Paris Fashion Week Uses Algorithms to Predict Trends

At Paris Fashion Week, designers and analysts are experimenting with AI to predict upcoming style trends by analyzing massive amounts of data, from runway archives and street style to social media and retail patterns. The systems identify emerging colors, shapes, and silhouettes and forecast how consumer tastes may evolve, helping brands stay ahead in a fast-moving industry. NPR

AI-Piloted Drones Signal a New Era for the U.S. Air Force

The U.S. Air Force is testing AI-piloted drones that can fly alongside human pilots, with systems like the XQ-58 learning basic combat maneuvers and even engaging in mock dogfights. The goal is to deploy fleets of autonomous aircraft that can take higher risks and provide support to crewed jets, while humans remain in control of life-and-death decisions. CBS News

Scoble’s Top Five X Posts